Editor’s Note: Regulatory frameworks across the globe are increasingly influencing the pace and scope of innovation. In a recent development, Meta’s ambitious AI expansion has encountered significant resistance in Europe and the United States due to privacy concerns and regulatory hurdles. This article explores the implications of these challenges for the broader tech industry, particularly in the realms of cybersecurity, information governance, and eDiscovery. The scrutiny faced by Meta underscores the critical balance between fostering innovation and upholding stringent data protection standards. This dynamic is particularly pertinent for professionals navigating the complexities of compliance and technological advancement in today’s digital economy.

Content Assessment: Meta's AI Expansion Faces Regulatory Roadblocks in Europe and the US

Information - 92%

Insight - 90%

Relevance - 90%

Objectivity - 92%

Authority - 88%

Overall - 90% (Excellent)

A short assessment of the qualitative benefit of the recent article from ComplexDiscovery OÜ titled, "Meta's AI Expansion Faces Regulatory Roadblocks in Europe and the US," with each score expressed as a percentage of positive reception.

Industry News – Artificial Intelligence Beat

Meta’s AI Expansion Faces Regulatory Roadblocks in Europe and the US

ComplexDiscovery Staff

Meta has recently faced significant hurdles in rolling out its AI offerings in Europe, citing privacy concerns and regulatory barriers as the primary reasons for the delay. Under a proposed update to its privacy policy, Meta planned to train its AI models on content publicly shared on Facebook and Instagram since 2007. The initiative, however, met strong opposition from the Irish Data Protection Commission and other European data protection authorities (DPAs).

Meta defended its use of public social media data as consistent with the “legitimate interests” legal basis under the EU General Data Protection Regulation (GDPR). The company also argues that, without this local data, the AI experience it can offer European users would be “second-rate.” Expressing disappointment over the forced delay, Meta stated, “We’re disappointed by the request from the Irish Data Protection Commission, our lead regulator, on behalf of the European DPAs, to delay training our large language models (LLMs) using public content shared by adults on Facebook and Instagram—particularly since we incorporated regulatory feedback and the European data protection authorities (DPAs) have been informed since March.”

Meta argues that this regulatory action is a setback for European innovation and competition in AI development and could delay the benefits of AI for European users. The company also contends that its practices are more transparent than those of rivals such as Google and OpenAI, which likewise train their models on vast amounts of data. Jake Moore, a global cybersecurity advisor at ESET, emphasized the necessity of large datasets for training AI, noting, “Artificial intelligent algorithms need ‘an extortionate amount of data’ to process a level of output that is expected to pass for human consumption.”

Beyond Europe, Meta’s practices in the United States have drawn criticism from privacy advocates. The company has been using public data from U.S. users’ social media accounts for its generative AI features without offering an opt-out option. The concern is heightened because U.S. data protection laws differ from those in the EU, often lacking robust consumer protections and opt-out provisions.

A Meta spokesperson has repeatedly stressed that using public data for AI training is common industry practice: “Across the internet, public information is being used to train AI. This is not unique to our services.” The spokesperson also reiterated the company’s stated commitment to responsible AI development.

Meta has also begun testing ‘AI Studio’ on Instagram in the U.S., allowing some creators to build AI versions of themselves to interact with their followers. These features have drawn scrutiny of their own, with data privacy, misinformation, and broader ethical implications under continued examination.

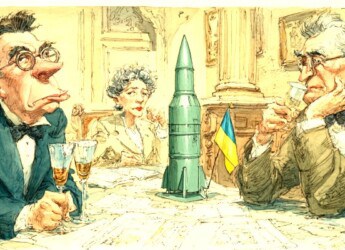

Meanwhile, Meta is not alone in feeling the sting of stringent European regulation. Apple has announced that several new features, including Apple Intelligence, iPhone Mirroring, and SharePlay Screen Sharing, may not reach European Union customers this year because of the Digital Markets Act (DMA). Apple said it was concerned that complying with the DMA could force it to compromise user privacy and data security, with an Apple spokesperson saying, “We are committed to collaborating with the European Commission in an attempt to find a solution that would enable us to deliver these features to our EU customers without compromising their safety.”

The challenges facing these tech giants underscore the complex balance among innovation, regulation, and privacy. Meta’s stated intention to keep pursuing its AI rollout in Europe highlights the need for regulatory clarity and industry cooperation so that technological advancement does not come at the expense of consumer rights.

News Sources

- Apple likely won’t release its new AI features in Europe amid regulatory concerns

- Meta steps up criticism of California Democrats trying to regulate AI: ‘Unrealistic’

- Instagram is starting to let some creators make AI versions of themselves

- How to Stop Mark Zuckerberg from Sucking Up Your Posts to Feed Meta’s AI Monster

- Meta Just Delayed Its EU AI Roll Out—Here’s Why It Matters

Assisted by GAI and LLM Technologies

Source: ComplexDiscovery OÜ