Editor’s Note: AI is reshaping the battlefield, but who—or what—controls the decision to strike? As Artificial Intelligence Decision Support Systems (AI DSS) enter military operations, a growing chorus of legal and cybersecurity experts is questioning whether these tools can align with the principles of International Humanitarian Law. This article, anchored by insights from CyCon 2025 in Tallinn, explores the dual imperatives of operational efficiency and ethical oversight in military AI deployment.

From NATO’s responsible AI strategy to India’s trust-based framework and the United Nations’ push for global governance, the legal and cybersecurity dimensions of AI DSS are converging. For cybersecurity, legal tech, and information governance professionals, this piece provides a vital look at the high-stakes interplay between emerging technologies and the enduring need for human accountability.



Securing the Algorithm: AI Decision Systems, Military Operations, and the Rule of Law

ComplexDiscovery Staff

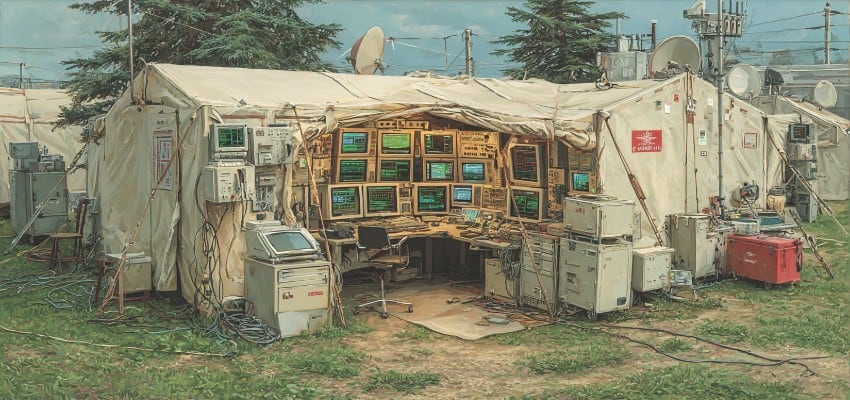

Imagine a battlefield where decisions about life and death are shaped not by human commanders, but by algorithms analyzing satellite data, behavioral patterns, and threat matrices in real time. As Artificial Intelligence Decision Support Systems (AI DSS) transition from theory to operational reality, one question becomes increasingly urgent: can these systems uphold the humanitarian principles that define lawful warfare?

The integration of AI DSS into battlefield decision-making is forcing a fundamental reassessment of how military power is exercised under International Humanitarian Law (IHL). What was once the domain of human judgment is now increasingly influenced by algorithmic systems, challenging the delicate balance between operational efficiency and the legal and ethical imperatives that protect human dignity in conflict.

Rethinking Human Judgment in AI-Augmented Warfare

Central to the debate is whether AI DSS can be embedded into military operations without eroding the human-centric foundations of IHL. These systems promise faster and more accurate decision-making, especially in force deployment and threat assessment. Yet the assumption of algorithmic neutrality is being met with growing skepticism.

At CyCon 2025 in Tallinn, Estonia, legal and military experts gathered to examine these tensions. Dr. Anna Rosalie Greipl of the Geneva Academy, speaking on the panel “International Law Perspectives on AI in Armed Conflict,” raised concerns that integrating AI DSS into command structures could distort legal decision-making processes. Her analysis pointed to a dangerous shift: as AI encroaches on decisions traditionally made by humans, algorithmic outcomes risk deviating from the rational and equitable standards enshrined in humanitarian law.

These concerns are far from academic. They reflect a broader fear that growing reliance on automation may introduce ethical lapses and reinforce opaque biases, especially when such systems are deployed in high-stakes, time-sensitive combat scenarios.

Safeguards, Oversight, and the Role of Governance

As nations test the limits of military AI, embedding safeguards within AI DSS architecture becomes paramount. These systems should support human oversight, not supplant it. Institutions such as NATO and the United Nations have articulated frameworks emphasizing responsible AI deployment. NATO’s 2021 AI Strategy and its 2023 Data and AI Review Board exemplify a growing emphasis on legal accountability, transparency, and meaningful human control.

India has also emerged as a pivotal voice. The Evaluating Trustworthy Artificial Intelligence (ETAI) Framework, introduced by the Defence Research and Development Organisation in October 2024, outlines five principles for responsible AI deployment in defense. The framework represents a proactive step toward embedding ethical standards and operational transparency. However, translating such frameworks from policy to practice remains a formidable challenge.

Global Divergence: Cooperation and Contestation

The geopolitical landscape surrounding military AI is anything but uniform. China and Russia have adopted alternative approaches to global AI governance, resisting alignment with US-led initiatives. While China supported the REAIM 2023 Call to Action, neither nation has joined multilateral declarations such as the US-led Political Declaration on the Responsible Military Use of Artificial Intelligence and Autonomy, which by late 2024 had garnered endorsements from nearly 60 countries.

Meanwhile, the UN Group of Governmental Experts on Lethal Autonomous Weapons Systems (LAWS), convened under the Convention on Certain Conventional Weapons, continues to seek consensus on how such systems should be regulated. India, for its part, favors outcome-based evaluations over rigid definitions, a pragmatic approach rooted in use-case accountability.

The European Union has also demonstrated nuanced leadership. Its landmark AI Act explicitly exempts military applications under Article 2(3), yet it still showcases regulatory agility, illustrating how policymakers can build dynamic oversight mechanisms without legislative paralysis.

Toward an Ethically Anchored AI Future

Despite divergent global approaches, domestic institutions with regulatory and technical capacity can pioneer tailored safeguards that respect local legal cultures while aligning with IHL. The Global Commission on Responsible Artificial Intelligence in the Military Domain (GC REAIM), launched in 2023, continues to draft global guidelines, with its final report expected by the end of 2025.

What is clear is that governance structures must evolve in lockstep with technological capabilities. The promise of AI-enhanced defense cannot eclipse the foundational norms that define the lawful conduct of war.

Reclaiming the Human in the Loop

The prospect of AI guiding battlefield decisions is no longer hypothetical; it is imminent. With this shift comes a renewed obligation to ensure these systems reflect not only tactical logic but also the humanitarian values that separate strategy from savagery. As international actors debate the frameworks needed to govern AI DSS, the stakes are too high for inaction.

And so, we return to the opening provocation: if an algorithm can deploy force, who ensures it does so justly? The answer lies not in abdicating human responsibility to machines, but in building AI systems that are accountable to the very laws designed to protect humanity in war.

News Sources

- CyCon 2025 Series – Artificial Intelligence in Armed Conflict: The Current State of International Law (Lieber Institute West Point)

- CyCon 2025 Series – Deciding with AI Systems: Rethinking Dynamics in Military Decision-Making (Lieber Institute West Point)

- Military AI Challenges Human Accountability (Center for International Policy)

- How AI will radically change military command structures (Fast Company)

- India’s Strategic Push for Ethical Leadership in Military AI (SSBCrack News)

- AI Revolutionizing Military Command Structures to Combat Napoleonic Era Constraints (SSBCrack News)

Assisted by GAI and LLM Technologies


Source: ComplexDiscovery OÜ