Editor’s Note: Deepfake technology, a sophisticated byproduct of artificial intelligence, has evolved rapidly into a significant challenge across digital media, affecting sectors from entertainment to politics. This article examines the efforts to mitigate the misuse of deepfakes, highlighting the roles played by tech companies such as Microsoft and OpenAI and by governmental bodies worldwide. As these entities develop technologies and regulations to combat AI-generated misinformation, the discussion also covers ethical concerns, legal ramifications, and broader societal impacts. For professionals in cybersecurity, information governance, and eDiscovery, understanding these developments is essential, both to navigate questions of digital content authenticity and to strengthen defenses against threats posed by AI.

Industry News – Artificial Intelligence Beat

The Global Struggle Against Deepfake Technology: A Critical Overview

ComplexDiscovery Staff

Deepfake technology, a byproduct of advanced artificial intelligence (AI), has rapidly transformed the landscape of digital media, presenting new challenges in the fight against misinformation. This technology, capable of generating highly realistic fake or altered digital content, is making waves across various sectors, from entertainment to politics, raising ethical concerns and sparking debates about the authenticity of digital content.

As the technology grows more sophisticated, companies like Microsoft and OpenAI, along with various government agencies, are at the forefront of combating its misuse. Microsoft, for instance, has launched initiatives to detect deepfakes and mitigate their effects, particularly in the context of political misinformation. OpenAI, known for its AI research, has introduced a detection tool that it reports can distinguish AI-generated images from real ones with about 99% accuracy.

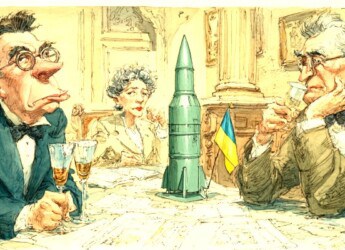

Notable figures such as Warren Buffett and Narendra Modi have voiced concerns about the dangers of deepfake technology. Buffett likened AI’s impact to that of nuclear technology, suggesting it could cause significant harm if not properly managed. Modi, for his part, has identified misinformation spread through deepfakes as a significant threat to India’s elections and has labeled the 2024 general election India’s first AI election.

Efforts to regulate and control the misuse of deepfake technology extend beyond individual companies to national governments. The U.S. government, through agencies like the Department of Defense and the Federal Trade Commission (FTC), is investing in research and setting regulations to prevent deepfakes from being used for fraud or to undermine democracy.

In Europe, the EU Digital Services Act imposes strict measures to hold online platforms accountable for the content they host, including deepfakes. This legislation is part of a broader effort to create an enforcement ecosystem that addresses the complexities introduced by AI-generated content.

The battle against deepfake technology is a global one, with countries from the United States to China and South Korea implementing measures to protect the integrity of digital content. While the potential benefits of AI in enhancing digital media and user experiences are vast, the imperative to safeguard against its misuse is clear. A collective effort from tech companies, regulators, and international organizations is crucial to ensuring that the future of digital media remains secure and trustworthy.

The proliferation of deepfake technology has also led to the development of new academic fields and research initiatives aimed at understanding and countering its effects. Universities and research institutions worldwide are dedicating resources to study the psychological impact of deepfakes, the technical aspects of their creation, and the societal implications of their spread. These studies are critical in developing more effective detection tools and educating the public about the risks associated with deepfake content.

Furthermore, the entertainment industry is grappling with the ethical implications of using deepfake technology. While it offers filmmakers and content creators new avenues for creativity, such as de-aging actors or resurrecting deceased performers, it also raises questions about consent and the authenticity of artistic expression. Industry guidelines and ethical standards are being discussed to navigate these challenges responsibly.

On the legal front, lawmakers in various countries are considering legislation specifically targeting the malicious use of deepfakes. These laws aim to criminalize the creation and distribution of deepfakes intended to harm individuals, spread false information, or interfere with democratic processes. Legal experts emphasize the importance of balancing these measures with the protection of free speech and innovation in AI technology.

In the realm of cybersecurity, deepfakes pose a unique threat to personal and national security. Cybercriminals can use deepfake technology to impersonate individuals in video calls, create convincing phishing campaigns, or spread disinformation to destabilize economies and societies. As a result, cybersecurity firms are developing advanced detection algorithms and training their systems to recognize and flag deepfake content, enhancing the security of digital communications and information.

The fight against deepfake technology is multifaceted, involving technological innovation, legal and ethical considerations, and international cooperation. As AI continues to evolve, so too will the strategies to mitigate its potential harms. The ongoing dialogue among tech companies, governments, civil society, and the public is essential in shaping a digital future that harnesses the benefits of AI while protecting against its risks.

News Sources

- The Deepfake Menace – AI’s Double-Edged Sword in Digital Era

- Meeting the moment: combating AI deepfakes in elections through today’s new tech accord

- OpenAI Introduces Advanced ‘Deepfake’ Detector to Combat Misinformation

- Fighting Election Deepfakes: Microsoft, OpenAI Pump $2 Million Fund Aims to Counter AI Misinformation

- Deepfakes and Democracy: Combatting Disinformation in the 2024 Election

Assisted by GAI and LLM Technologies

Source: ComplexDiscovery OÜ