Editor’s Note: The paper Detection and Measurement of Syntactic Templates in Generated Text provides valuable insights for professionals in legal technology, cybersecurity, and information governance. By focusing on syntactic templates, the research uncovers how large language models (LLMs) replicate structural patterns found in their training data. This revelation raises important questions about content originality, model transparency, and the potential risks of memorization in AI outputs. For those concerned with the integrity and reliability of AI-generated content, the findings of this study are timely and essential.

Content Assessment: Uncovering Repetition: How Syntactic Templates Reveal Patterns in AI-Generated Text

Information - 93%

Insight - 92%

Relevance - 92%

Objectivity - 92%

Authority - 93%

92%

Excellent

A short percentage-based assessment of the qualitative benefit expressed as a percentage of positive reception of the recent article from ComplexDiscovery OÜ titled, "Uncovering Repetition: How Syntactic Templates Reveal Patterns in AI-Generated Text."

Industry News – Artificial Intelligence Beat

Uncovering Repetition: How Syntactic Templates Reveal Patterns in AI-Generated Text

ComplexDiscovery Staff*

In an era defined by the accelerating capabilities of large language models (LLMs), understanding the true originality of their outputs has become a pressing concern for professionals in fields such as legal technology and information governance. While AI-generated content continues to improve in fluency and utility, the research paper Detection and Measurement of Syntactic Templates in Generated Text by Chantal Shaib, Yanai Elazar, Junyi Jessy Li, and Byron C. Wallace sheds new light on the subtleties of repetition embedded within these outputs. This groundbreaking study introduces the concept of syntactic templates as a means to evaluate repetition beyond simple word-level analysis, providing a new framework to understand how LLMs generate text and the extent to which training data influences this process.

Syntactic Templates: A Deeper Dive into AI-Generated Content

For years, assessments of AI outputs have largely concentrated on surface-level attributes, such as token diversity, to measure novelty. This approach, however, misses a crucial layer of language analysis—syntactic structure. The study fills this gap by focusing on syntactic templates, defined as sequences of part-of-speech (POS) tags that reveal patterns of sentence construction.

Consider this example: while the phrase “a romantic comedy about a corporate executive” and the summary “a humorous insight into the perceived class” have only one overlapping word, they share a common syntactic template—DT JJ NN IN DT JJ NN. Such patterns help identify more nuanced repetitions in AI text generation. By analyzing these templates, the study provides insights into how LLMs rely on structural repetition, often sourced directly from their training data.

Why Repetition Matters

For lawyers and legal technology professionals, understanding the origins and structures of AI-generated text is more than an academic exercise; it’s a matter of maintaining content integrity and ensuring model transparency. Repetitive structures might indicate a lack of true creativity or could point to overfitting on specific datasets, raising questions about the reliability and originality of AI-generated documents.

The paper addresses this by posing critical questions: To what extent do LLM outputs contain these templates? Can we trace these structures back to the training data, and what implications does this have for data memorization and content verification?

Measuring Templates: Methodology and Metrics

To answer these questions, the study introduces a framework for detecting and measuring syntactic templates. By leveraging part-of-speech tagging, the researchers focused on identifying and quantifying repeated POS sequences in generated text, using metrics such as:

- CR-POS (Compression Ratio of POS): This metric measures the redundancy of POS-tagged text, offering a glimpse into how compressible and repetitive the syntactic structure of a document is.

- Template Rate: Indicates the percentage of texts within a corpus that contain at least one identified template, revealing the prevalence of such structures.

- Templates-per-Token: Normalizes the number of templates by text length to compare outputs across various sources.

The researchers applied these measures to assess outputs from a range of models, including both open-source and proprietary systems. Their work spanned open-ended generation tasks, synthetic data creation, and summarization exercises using datasets like Rotten Tomatoes movie reviews and the Cochrane medical summaries.

Key Findings

The results are both illuminating and cautionary. The study found that LLMs frequently generate text containing templates that also appear in their pre-training data. For example, about 76% of the templates detected in model-generated text were present in the pre-training datasets, compared to only 35% in human-authored texts. This pattern held even in models that had undergone fine-tuning and reinforcement learning from human feedback (RLHF).

Comparing Models: A Spectrum of Templated Outputs

The analysis revealed that while human-written summaries in the Rotten Tomatoes dataset contained templates in only 38% of cases, model outputs showed a significantly higher rate, averaging around 95%. Even when adjusting for length, AI-generated text demonstrated a higher frequency of templates-per-token than human-authored counterparts.

Style Memorization: Beyond Exact Matches

One of the most compelling aspects of the research is its examination of “style memorization,” where models reproduce structures or syntactic forms without duplicating exact phrases. This form of memorization involves synonymous substitutions or slight numerical adjustments that preserve the underlying pattern. The study found that using this broader definition, 6.4% of sequences showed stylistic repetition compared to 5.3% identified by exact-text matches.

Implications and Future Directions

The findings have significant implications for how legal professionals, content creators, and AI developers approach model training and evaluation. The prevalence of syntactic templates suggests that LLMs’ training data heavily influences their output, which could be problematic when content originality is paramount.

Moreover, the exploration of style memorization points to a need for more nuanced criteria in assessing AI-generated text. While this research serves as an initial foray into the topic, future work could expand to cover non-English languages, more complex syntactic constructs, and domain-specific applications.

A New Lens on LLM Behavior

Understanding the subtle, structural repetition in AI outputs is more than an academic pursuit; it is essential for fields that rely on precision, originality, and transparency. This research provides a foundation for business and legal professionals to better grasp the intricacies of LLM behavior and its implications for content authenticity and intellectual property.

As models continue to evolve, so too must our methods for evaluating their outputs. The concept of syntactic templates offers an important step in that direction, emphasizing that true diversity in AI-generated content involves more than just a varied vocabulary—it requires an analysis of the structures that underpin the text. For those navigating the intersection of technology and law, such insights are invaluable.

News Sources

- Detection and Measurement of Syntactic Templates in Generated Text

- Detection and Measurement of Syntactic Templates in Generated Text (HTML)

Assisted by GAI and LLM Technologies

*Reported on with permission per Creative Commons (CC BY- 4.0).

Additional Reading

- The Dual Impact of Large Language Models on Human Creativity: Implications for Legal Tech Professionals

- AI Regulation and National Security: Implications for Corporate Compliance

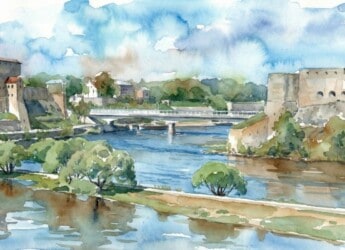

Source: ComplexDiscovery OÜ