ARCHIVED CONTENT

You are viewing ARCHIVED CONTENT released online between 1 April 2010 and 24 August 2018 or content that has been selectively archived and is no longer active. Content in this archive is NOT UPDATED, and links may not function.

Predictive Coding Technologies: An Algorithmic Overview

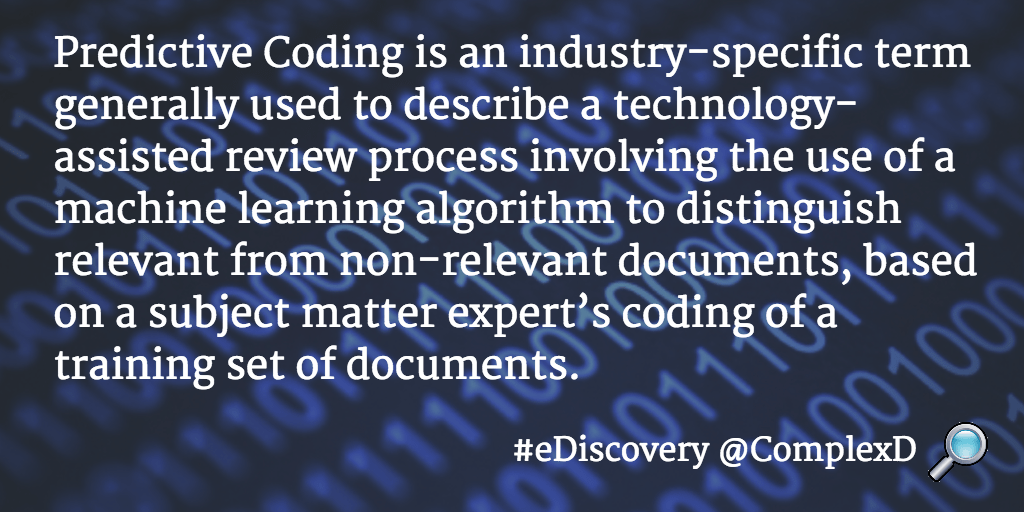

As defined in The Grossman-Cormack Glossary of Technology-Assisted Review*, Predictive Coding is an industry-specific term generally used to describe a technology-assisted review process involving the use of a machine learning algorithm to distinguish relevant from non-relevant documents, based on a subject matter expert’s coding of a training set of documents. This definition provides a baseline description that identifies one particular function for which a general set of commonly accepted machine learning algorithms may be used in technology-assisted review.

With the growing awareness and use of predictive coding as a technology-assisted review feature in the legal arena, it is increasingly important for electronic discovery professionals to have a general understanding of the algorithmic approaches that may be implemented in electronic discovery platforms to facilitate predictive coding of electronically stored information. This understanding matters because each approach has its own efficiency advantages and disadvantages, and these may affect the efficacy of predictive coding.

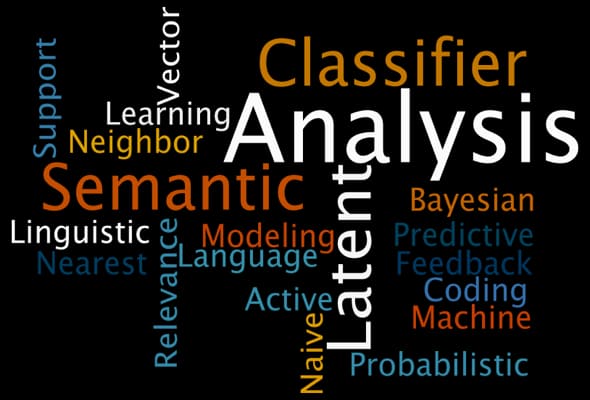

A Working List of Predictive Coding Technologies

Provided below is a working list of machine learning approaches that have been applied, or have the potential to be applied, to the discipline of eDiscovery to facilitate predictive coding. This non-exhaustive working list is designed to provide a reference point for identified predictive coding technologies and may over time include additions, adjustments and/or amendments based on feedback from experts and organizations applying and implementing these technologies in their specific eDiscovery platforms.

Listed in Alphabetical Order

- Active Learning: An iterative process that presents for reviewer judgment those documents that are most likely to be misclassified. In conjunction with Support Vector Machines, it presents those documents that are closest to the current position of the separating line. The line is moved if any of the presented documents has been misclassified.

- Language Modeling: A mathematical approach that seeks to summarize the meaning of words by looking at how they are used in the set of documents. Language modeling in predictive coding builds a model for word occurrence in the responsive and in the non-responsive documents and classifies documents according to the model that best accounts for the words in a document being considered.

- Latent Semantic Analysis: A mathematical approach that seeks to summarize the meaning of words by looking at the documents that share those words. LSA builds up a mathematical model of how words are related to documents and lets users take advantage of these computed relations to categorize documents.

- Linguistic Analysis: Linguists examine responsive and non-responsive documents to derive classification rules that maximize the correct classification of documents.

- Logistic Regression: A statistical modeling approach that is well accepted by the computer science and information retrieval communities for data analysis and predictive modeling. Logistic regression is a form of supervised learning, in that a logistic regression model is produced by “training” on a set of documents that have been manually categorized. Once trained, the model can be used to estimate the probability that a new document belongs to each of the possible categories. The model can use both content features, such as words and phrases, and metadata features, such as custodian, date, file type, and contextual information. Logistic regression models can be applied to data sets with millions of documents and billions of content features, and are one of the most effective approaches in a wide range of text and data mining tasks.

- Naïve Bayesian Classifier: A system that examines the probability that each word in a new document came from the word distribution derived from the responsive training documents or from the word distribution derived from the non-responsive training documents. The system is naïve in the sense that it assumes that all words are independent of one another given the category.

- Nearest Neighbor Classifier: A classification system that categorizes documents by finding an already classified example that is very similar (near) to the document being considered. It gives the new document the same category as the most similar training example.

- Probabilistic Hierarchical Context-Free Grammars: An approach to machine learning in which structures are built up by applying a sequence of context-free rewrite rules, where each rule in the sequence is selected independently at random.

- Probabilistic Latent Semantic Analysis (PLSA): A probabilistic variant of Latent Semantic Analysis that likewise seeks to summarize the meaning of words by looking at the documents that share those words. PLSA builds up a statistical model of how words are related to documents and lets users take advantage of these computed relations to categorize documents.

- Relevance Feedback: A computational model that adjusts the criteria for implicitly identifying responsive documents based on feedback from a knowledgeable user as to which documents are relevant and which are not.

- Support Vector Machine: A mathematical approach that seeks to find a line (in general, a hyperplane in the high-dimensional space of document features) that separates responsive from non-responsive documents so that, ideally, all of the responsive documents are on one side of the line and all of the non-responsive ones are on the other side.
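
The Active Learning entry can be sketched in Python as uncertainty sampling over a linear scoring model. This is a minimal illustration under stated assumptions, not a platform implementation. The starting weights, the toy documents, and the `oracle` function standing in for the reviewer are all hypothetical. A simple perceptron update is swapped in for full SVM retraining, to show the line moving when a presented document was misclassified.

```python
def score(weights, words):
    """Linear score: > 0 places a document on the responsive side of the line."""
    return sum(weights.get(word, 0.0) for word in words)


def most_uncertain(weights, pool, k):
    """Select the k unreviewed documents closest to the separating line."""
    return sorted(pool, key=lambda doc: abs(score(weights, doc.split())))[:k]


def review_round(weights, pool, oracle, k=2, step=1.0):
    """One active-learning round (hypothetical helper).

    Present the k most uncertain documents for reviewer judgment, then move the
    line (a perceptron-style update, used here in place of SVM retraining) for
    each presented document the current model misclassified.
    """
    batch = most_uncertain(weights, pool, k)
    for doc in batch:
        truth = oracle(doc)  # reviewer's call: +1 responsive, -1 non-responsive
        predicted = 1 if score(weights, doc.split()) > 0 else -1
        if predicted != truth:
            for word in doc.split():
                weights[word] = weights.get(word, 0.0) + step * truth
        pool.remove(doc)  # reviewed documents leave the unreviewed pool
    return batch


# Hypothetical starting model, unreviewed pool, and reviewer.
weights = {"merger": 1.0, "lunch": -1.0}
pool = ["merger terms", "lunch order", "pricing memo", "golf outing"]
RESPONSIVE = {"merger terms", "pricing memo"}


def oracle(doc):
    return 1 if doc in RESPONSIVE else -1


batch = review_round(weights, pool, oracle)
print(batch)    # ['pricing memo', 'golf outing']
print(weights)  # "pricing" and "memo" now carry positive weight
```

Documents that score exactly on the line (here, those containing no known words) are presented first, which is the intended behaviour of uncertainty sampling. Only the misclassified "pricing memo" moves the line.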
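
The Language Modeling entry can be illustrated with a minimal Python sketch. It builds one unigram (single-word) model for the responsive training documents and one for the non-responsive ones, then assigns a new document to whichever model gives its words the higher likelihood. Several choices are assumptions for illustration: Jelinek-Mercer smoothing against a whole-collection background model, the mixing weight `lam=0.5`, and the toy documents. Real systems may use other smoothing methods or richer models.

```python
import math
from collections import Counter


def unigram_model(docs):
    """Word counts and total token count for a list of tokenized documents."""
    counts = Counter(token for doc in docs for token in doc)
    return counts, sum(counts.values())


def log_likelihood(doc, model, background, lam=0.5):
    """log P(doc | model) with Jelinek-Mercer smoothing.

    Each word's probability mixes the class model with a background model of
    the whole collection, so a word absent from one class does not zero it out.
    """
    counts, total = model
    bg_counts, bg_total = background
    score = 0.0
    for token in doc:
        p_background = bg_counts[token] / bg_total
        if p_background == 0:
            continue  # word unseen in any training document: skip it
        p = lam * counts[token] / total + (1 - lam) * p_background
        score += math.log(p)
    return score


def classify_by_language_model(doc, responsive_docs, nonresponsive_docs):
    """Pick the category whose language model best accounts for the words."""
    background = unigram_model(responsive_docs + nonresponsive_docs)
    responsive = log_likelihood(doc, unigram_model(responsive_docs), background)
    nonresponsive = log_likelihood(doc, unigram_model(nonresponsive_docs), background)
    return "responsive" if responsive > nonresponsive else "non-responsive"


responsive_docs = [d.split() for d in ["merger agreement draft", "board approves merger terms"]]
nonresponsive_docs = [d.split() for d in ["lunch menu friday", "parking closed friday"]]
doc = "revised merger terms".split()
print(classify_by_language_model(doc, responsive_docs, nonresponsive_docs))  # responsive
```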
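
The Logistic Regression entry can be illustrated with a minimal Python sketch. It trains a binary model (responsive vs. non-responsive) by stochastic gradient ascent on the log-likelihood. The following are illustrative assumptions: encoding metadata as features such as `custodian=cfo`, the learning rate, the epoch count, the absence of regularization, and the toy documents. Production systems typically add regularization and handle far larger feature sets.

```python
import math


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train_logistic_regression(docs, labels, epochs=200, lr=0.5):
    """Fit weights by stochastic gradient ascent on the log-likelihood.

    docs: sets of features (content words plus metadata such as "custodian=cfo");
    labels: 1 for responsive, 0 for non-responsive.
    """
    weights, bias = {}, 0.0
    for _ in range(epochs):
        for features, y in zip(docs, labels):
            p = sigmoid(bias + sum(weights.get(f, 0.0) for f in features))
            error = y - p  # gradient of the log-likelihood with respect to the score
            bias += lr * error
            for f in features:
                weights[f] = weights.get(f, 0.0) + lr * error
    return weights, bias


def probability_responsive(model, features):
    """Estimated probability that a document with these features is responsive."""
    weights, bias = model
    return sigmoid(bias + sum(weights.get(f, 0.0) for f in features))


docs = [
    {"merger", "terms", "custodian=ceo"},
    {"merger", "valuation", "custodian=cfo"},
    {"lunch", "order", "custodian=reception"},
    {"parking", "pass", "custodian=reception"},
]
labels = [1, 1, 0, 0]
model = train_logistic_regression(docs, labels)
print(probability_responsive(model, {"merger", "custodian=cfo"}))
print(probability_responsive(model, {"lunch", "custodian=reception"}))
```

The output is a probability rather than a hard label, so a review team could choose its own cut-off for what counts as likely responsive.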
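
The Naïve Bayesian Classifier entry can be made concrete with a minimal multinomial Naive Bayes sketch in Python. Whitespace tokenization, add-one (Laplace) smoothing, ignoring words never seen in training, and the four-document training set are assumptions for illustration only.

```python
import math
from collections import Counter


def train_naive_bayes(docs, labels):
    """Multinomial Naive Bayes with add-one (Laplace) smoothing.

    docs: list of token lists; labels: parallel list of category names.
    """
    vocab = {token for doc in docs for token in doc}
    model = {}
    for label in set(labels):
        class_docs = [doc for doc, y in zip(docs, labels) if y == label]
        counts = Counter(token for doc in class_docs for token in doc)
        model[label] = (
            math.log(len(class_docs) / len(docs)),  # log prior P(category)
            counts,
            sum(counts.values()),
        )
    return vocab, model


def classify_naive_bayes(trained, doc):
    vocab, model = trained
    scores = {}
    for label, (log_prior, counts, total) in model.items():
        score = log_prior
        for token in doc:
            if token not in vocab:
                continue  # word never seen in training: no evidence either way
            # Smoothed P(word | category). Summing logs multiplies the per-word
            # probabilities, which is exactly the "naive" independence assumption.
            score += math.log((counts[token] + 1) / (total + len(vocab)))
        scores[label] = score
    return max(scores, key=scores.get)


train_texts = [
    "merger agreement draft for board review",
    "board approves merger terms",
    "lunch menu for friday",
    "parking garage closed friday",
]
labels = ["responsive", "responsive", "non-responsive", "non-responsive"]
trained = train_naive_bayes([t.split() for t in train_texts], labels)
print(classify_naive_bayes(trained, "revised merger terms".split()))  # responsive
```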
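
The Nearest Neighbor Classifier entry can be sketched in a few lines of Python. The entry does not say how similarity is measured. This sketch assumes cosine similarity over word counts and uses a hypothetical two-example training set.

```python
import math
from collections import Counter


def cosine(a, b):
    """Cosine similarity between two word-count vectors (Counters)."""
    dot = sum(count * b[word] for word, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def nearest_neighbor(training, text):
    """1-NN: give `text` the label of the most similar already-classified example."""
    query = Counter(text.lower().split())
    _, best_label = max(
        training, key=lambda pair: cosine(query, Counter(pair[0].lower().split()))
    )
    return best_label


training = [
    ("Quarterly revenue forecast for the merger", "responsive"),
    ("Holiday party RSVP", "non-responsive"),
]
print(nearest_neighbor(training, "Updated revenue forecast"))  # responsive
```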
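
The generative process in the Probabilistic Hierarchical Context-Free Grammars entry can be sketched with a toy probabilistic context-free grammar in Python. The grammar, its rule probabilities, and the seed are hypothetical. The sketch shows only two things: how a hierarchical structure is built by independent random rule choices, and why its probability is the product of the chosen rules' probabilities. It does not show how such grammars are learned or applied to document review.

```python
import random

# Toy grammar (hypothetical): nonterminal -> list of (right-hand side, probability).
# Probabilities for each nonterminal sum to 1; any symbol not listed is a terminal word.
GRAMMAR = {
    "S": [(["NP", "VP"], 1.0)],
    "NP": [(["the", "N"], 0.7), (["the", "ADJ", "N"], 0.3)],
    "VP": [(["V", "NP"], 0.6), (["V"], 0.4)],
    "N": [(["board"], 0.5), (["merger"], 0.5)],
    "ADJ": [(["revised"], 1.0)],
    "V": [(["approves"], 0.5), (["delays"], 0.5)],
}


def expand(symbol, rng):
    """Rewrite `symbol` top-down, choosing each rule independently at random.

    Returns the generated words and the probability of this derivation, which
    is the product of the probabilities of every rule applied.
    """
    if symbol not in GRAMMAR:
        return [symbol], 1.0  # terminal: nothing left to rewrite
    rules = GRAMMAR[symbol]
    rhs, prob = rng.choices(rules, weights=[p for _, p in rules])[0]
    words = []
    for child in rhs:
        child_words, child_prob = expand(child, rng)
        words.extend(child_words)
        prob *= child_prob
    return words, prob


words, prob = expand("S", random.Random(7))
print(" ".join(words), prob)
```

Because each rule is drawn independently, a derivation that uses `NP -> the ADJ N` at some step is less likely than one using `NP -> the N` there (0.3 vs. 0.7). The returned probability reflects every such choice.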
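
The Relevance Feedback entry does not name a specific model. One classic instance is the Rocchio algorithm, sketched here in Python as an assumption. The query and documents are word-weight vectors. The coefficients `alpha`, `beta` and `gamma` are illustrative values common in textbook presentations, not settings taken from any eDiscovery platform.

```python
from collections import Counter


def rocchio(query, relevant, nonrelevant, alpha=1.0, beta=0.75, gamma=0.15):
    """Revise a query vector from reviewer feedback (Rocchio).

    The new query keeps alpha times the original query, moves toward the centroid
    of documents judged relevant (beta) and away from the centroid of documents
    judged non-relevant (gamma).
    """
    revised = Counter()
    for term, weight in query.items():
        revised[term] += alpha * weight
    for docs, coef in ((relevant, beta), (nonrelevant, -gamma)):
        for doc in docs:
            for term, weight in doc.items():
                revised[term] += coef * weight / len(docs)  # centroid contribution
    # Negative weights are commonly clipped to zero.
    return Counter({term: w for term, w in revised.items() if w > 0})


def score(query, doc):
    """Dot-product score used to rank unreviewed documents against the query."""
    return sum(weight * doc[term] for term, weight in query.items())


query = Counter({"merger": 1.0})
relevant = [Counter({"merger": 1, "valuation": 1})]
nonrelevant = [Counter({"merger": 1, "lunch": 1})]
revised = rocchio(query, relevant, nonrelevant)
print(revised)  # "valuation" gains weight; "lunch" is clipped out
print(score(revised, Counter({"valuation": 2})) > score(revised, Counter({"lunch": 2})))  # True
```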
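
The Support Vector Machine entry can be illustrated with a minimal linear SVM in Python. It is trained with a Pegasos-style stochastic sub-gradient method on the hinge loss with L2 regularization. Several choices keep the sketch short and deterministic and are assumptions: cycling through examples in order (rather than sampling at random), the constant `__bias__` feature, `lam`, `epochs`, and the toy documents. Production systems typically rely on an optimized SVM library.

```python
def train_linear_svm(docs, labels, lam=0.01, epochs=50):
    """Pegasos-style sub-gradient descent for a linear SVM.

    docs: lists of words; labels: +1 responsive, -1 non-responsive.
    A constant "__bias__" feature lets the separating line sit off the origin.
    """
    w = {}
    t = 0
    for _ in range(epochs):
        for words, y in zip(docs, labels):
            t += 1
            eta = 1.0 / (lam * t)  # decreasing step size
            x = set(words) | {"__bias__"}
            margin = y * sum(w.get(f, 0.0) for f in x)
            for f in w:  # regularization: shrink every weight
                w[f] *= 1.0 - eta * lam
            if margin < 1:  # inside the margin or misclassified: push toward y
                for f in x:
                    w[f] = w.get(f, 0.0) + eta * y
    return w


def svm_score(w, words):
    """Positive scores fall on the responsive side of the separating line."""
    return sum(w.get(f, 0.0) for f in set(words) | {"__bias__"})


docs = [s.split() for s in ["merger terms", "merger valuation", "lunch order", "parking pass"]]
labels = [1, 1, -1, -1]
w = train_linear_svm(docs, labels)
print(svm_score(w, "revised merger terms".split()) > 0)  # True
print(svm_score(w, "friday lunch".split()) < 0)  # True
```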

Additional approaches that can be used to augment, or in lieu of, predictive coding algorithms limited to core textual analytics include:

- Visual Classification: Visual Classification groups documents based on their appearance, which normalizes documents regardless of the types of files holding the content. This approach is enabled by glyph recognition, the process of associating text values with the glyphs present in a document.

References

*The Grossman-Cormack Glossary of Technology-Assisted Review (2013 Fed. Cts. L. Rev. 7) by Maura Grossman and Gordon Cormack. EDRM.