Editor’s Note: The issue of ‘hallucinations’ in generative AI models such as ChatGPT has become a significant concern, as these AI systems sometimes produce confident but incorrect information. Researchers, particularly from the University of Oxford, have been developing methods to detect and mitigate these hallucinations. A recent study proposes a new technique involving ‘semantic entropy’ to identify when an AI model is likely to be confabulating, or making up answers. This method could increase the reliability of AI systems in high-stakes scenarios, though experts caution that completely eliminating hallucinations may not be possible in the near term.


Industry News – Artificial Intelligence Beat

Combating AI Hallucinations: Oxford Researchers Develop New Detection Method

ComplexDiscovery Staff

Generative AI technologies like ChatGPT and Google’s Gemini, while revolutionary, have a significant issue known as “hallucination,” where the AI confidently provides inaccurate or entirely fabricated information. This problem has led to notable public debacles, such as when Google Bard made erroneous claims about the James Webb Space Telescope, severely impacting Google’s stock price. Similarly, in February, Air Canada was compelled by a tribunal to honor a chatbot’s erroneous discount offer to a passenger, showing how these inaccuracies can have financial consequences.

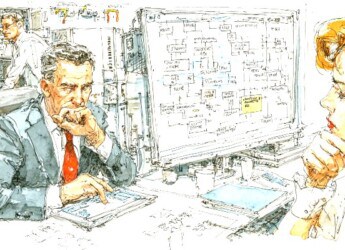

Researchers from the University of Oxford are actively exploring ways to curb AI hallucinations. A new study published in Nature outlines a method to detect when AI-generated answers might be incorrect. The researchers focus on a specific type of hallucination known as confabulation, where the AI gives varied wrong answers to the same question. The method involves asking the AI to respond to the same prompt multiple times and then using another AI model to cluster these responses based on their meanings. The spread of the responses across those clusters is measured as “semantic entropy.” If the answers vary significantly in meaning, indicating high semantic entropy, they are likely hallucinations. This approach has been shown to be 79% effective in identifying incorrect AI responses, outperforming other methods like “naive entropy” and “embedding regression.”
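As a rough illustration of the quantity involved, the standard entropy formula can be applied to clusters of meaning. This is a simplified, count-based form assumed here for clarity, not a formula quoted from the study: suppose $N$ sampled answers fall into $K$ meaning clusters, with $n_k$ answers in cluster $k$.

$$
\hat{H} = -\sum_{k=1}^{K} \frac{n_k}{N} \log \frac{n_k}{N}
$$

If every answer lands in the same cluster, $\hat{H} = 0$. If each of the $N$ answers means something different, $\hat{H} = \log N$, the largest value possible for that sample size.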

Sebastian Farquhar, a senior research fellow at Oxford University’s department of computer science, shared that this approach would have saved the lawyer who was fined for relying on a ChatGPT hallucination. The lawyer had used ChatGPT to draft a court filing, which included fictitious citations, resulting in a $5,000 fine. Farquhar’s team believes their method can drastically reduce such incidents by providing a means to gauge the reliability of AI outputs.

However, not all experts are convinced of the immediate effectiveness of this approach. Arvind Narayanan, a professor of computer science at Princeton University, acknowledged the study’s merit but advised caution. He pointed out that while the method shows promise, integrating it into real-world applications could be challenging. Narayanan also noted that hallucination rates have generally declined with the release of better models but believes that the issue is intrinsic to how large language models (LLMs) function and might never be fully eradicated.

The Oxford researchers’ strategy is to use one AI model to check the consistency of another. They ask the generative AI to produce multiple answers to the same question, then use a second AI model to assess the similarity of these responses, clustering them based on their meanings. High variation in answers, or high semantic entropy, signals a hallucination. This method doesn’t rely on subject-specific training data, making it versatile across different topics.

For instance, when asked about the largest moon in the solar system, a hallucinating AI might give inconsistent answers like “Titan” and “Ganymede.” The Oxford method’s second AI would cluster these responses and flag the inconsistency as a likely hallucination. This technique is particularly useful because it identifies when the AI is “confabulating,” offering varied incorrect answers rather than consistent ones.
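The clustering and scoring steps described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the researchers' implementation: `same_meaning` is a hypothetical stand-in for the second AI model (here it only compares normalized strings), the entropy is computed from simple cluster counts, and the `threshold` value is arbitrary.

```python
import math
import string
from typing import Callable, List


def normalize(text: str) -> str:
    """Lowercase the text and drop punctuation and surrounding whitespace."""
    table = str.maketrans("", "", string.punctuation)
    return text.lower().translate(table).strip()


def same_meaning(a: str, b: str) -> bool:
    """Hypothetical stand-in for the second AI model.

    A real system would ask a model whether two answers mean the same
    thing; this toy version only checks for a match after normalizing.
    """
    return normalize(a) == normalize(b)


def cluster_by_meaning(
    answers: List[str],
    equivalent: Callable[[str, str], bool] = same_meaning,
) -> List[List[str]]:
    """Greedily group answers: join the first cluster whose first member matches."""
    clusters: List[List[str]] = []
    for answer in answers:
        for cluster in clusters:
            if equivalent(answer, cluster[0]):
                cluster.append(answer)
                break
        else:
            # No existing cluster matched, so this answer starts a new one.
            clusters.append([answer])
    return clusters


def semantic_entropy(
    answers: List[str],
    equivalent: Callable[[str, str], bool] = same_meaning,
) -> float:
    """Entropy (in nats) of the share of answers falling in each meaning cluster."""
    if not answers:
        return 0.0
    clusters = cluster_by_meaning(answers, equivalent)
    shares = [len(cluster) / len(answers) for cluster in clusters]
    return sum(-p * math.log(p) for p in shares)


def likely_confabulation(answers: List[str], threshold: float = 0.5) -> bool:
    """Flag a set of answers whose entropy exceeds an (arbitrary) threshold."""
    return semantic_entropy(answers) > threshold


# The same question asked several times, as in the moon example above.
consistent = ["Ganymede", "ganymede.", "Ganymede"]
mixed = ["Titan", "Ganymede", "Titan", "Ganymede"]

print(semantic_entropy(consistent), likely_confabulation(consistent))
print(semantic_entropy(mixed), likely_confabulation(mixed))
```

With these inputs, the consistent answers form a single cluster and score an entropy of 0, while the evenly split “Titan” and “Ganymede” answers form two clusters and score about 0.693 (the natural log of 2), which exceeds the illustrative threshold. Swapping `same_meaning` for a model-based comparison is what would let the approach treat differently worded answers with the same meaning as one cluster.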

The efficacy of this method was demonstrated with real-world examples, such as detecting inconsistencies in narratives about Freddie Frith, a renowned motorcycle racer. When asked multiple times, the AI provided different birth dates for Frith, which were successfully flagged by the Oxford method. This capability is crucial for applications in high-stakes environments, such as legal or medical fields, where incorrect information can have severe consequences.

Despite its promise, the method has limitations. Because each question must be answered several times and the answers then compared by a second model, it requires significantly more processing power than standard AI operations. Moreover, it addresses only one type of hallucination, confabulation, and does not cover others, such as consistent inaccuracies caused by flawed training data.

Google DeepMind is pursuing a parallel effort to improve AI reliability, looking to incorporate “universal self-consistency” into its models. This would theoretically allow users to click a button for a certainty score, gauging the reliability of a given response. This approach, like Oxford’s, aims to provide more dependable AI interactions, especially in contexts where accuracy is non-negotiable.

While the battle against AI hallucinations continues, the University of Oxford’s research represents a significant step forward in making AI a more reliable partner in various aspects of life and work. Until these solutions are fully integrated and perfected, users are advised to treat AI outputs with caution, always double-checking critical information.

News Sources

- AI Strategies Series: 7 Ways to Overcome Hallucinations

- How to spot generative AI ‘hallucinations’ and prevent them

- Scientists Develop New Algorithm to Spot AI ‘Hallucinations’

- Researchers hope to quash AI hallucination bugs that stem from words with more than one meaning

- Researchers Say Chatbots ‘Policing’ Each Other Can Correct Some AI Hallucinations

Assisted by GAI and LLM Technologies


Source: ComplexDiscovery OÜ