Editor’s Note: As artificial intelligence continues to evolve at an unprecedented pace, the complexities of managing its risks and ensuring ethical deployment become increasingly critical for organizations. This article highlights the latest efforts by leading institutions like MIT and the Cloud Security Alliance (CSA) to equip businesses with the tools and frameworks necessary to navigate these challenges. The AI Risk Repository and CSA’s insights into offensive security with AI offer valuable guidance for decision-makers striving to balance innovation with responsibility. For professionals in cybersecurity, information governance, and eDiscovery, understanding these resources is essential to safeguarding their organizations while harnessing the transformative power of AI.

Content Assessment: AI Risks and Ethics: Insights from MIT, Deloitte, and CSA

Information - 90%

Insight - 91%

Relevance - 92%

Objectivity - 90%

Authority - 88%

Overall - 90% (Excellent)

A short, percentage-based assessment of the qualitative benefit of the recent ComplexDiscovery OÜ article titled "AI Risks and Ethics: Insights from MIT, Deloitte, and CSA," expressed as the percentage of positive reception.

Industry News – Artificial Intelligence Beat

AI Risks and Ethics: Insights from MIT, Deloitte, and CSA

ComplexDiscovery Staff

As the rapid advancement of artificial intelligence (AI) brings both innovation and challenges, businesses are grappling with the myriad risks and ethical concerns associated with AI technologies. To support decision-makers in navigating this complex landscape, researchers from institutions like MIT and the Cloud Security Alliance (CSA) have been intensifying efforts to create comprehensive resources for AI risk assessment and ethical deployment.

The AI Risk Repository, spearheaded by Peter Slattery, an incoming postdoc at MIT FutureTech, consolidates information from 43 existing taxonomies into a database of more than 700 unique AI risks. It aims to give businesses a robust framework for understanding and mitigating AI-related risks. “We wanted a fully comprehensive overview of AI risks to use as a checklist,” Slattery told VentureBeat. The resulting curation gives organizations, especially those developing or deploying AI systems, a structured way to assess their risk exposure.

The repository uses a two-dimensional classification system. First, risks are categorized by their causes, considering factors such as the responsible entity, intent, and timing. Second, they are classified into seven domains, including discrimination, privacy, misinformation, and security. This structure is intended to help organizations tailor their risk mitigation strategies to the specific risks they face. Neil Thompson, head of the MIT FutureTech Lab, emphasizes that the repository will be regularly updated with new risks, research findings, and trends to keep it relevant and useful.
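To make the two-dimensional scheme more concrete, the following is a minimal Python sketch of how an organization might tag risks on both dimensions for internal checklist use. It is an illustration under stated assumptions, not the repository's actual schema or data: the `RiskEntry` class, the `checklist` helper, the specific cause values (human or AI, intentional or unintentional, pre- or post-deployment), and the sample entries are all hypothetical. Only the cause factors (entity, intent, timing) and the domain names come from the description above.

```python
from dataclasses import dataclass
from enum import Enum


# Cause dimensions named in the article; the specific values are assumptions.
class Entity(Enum):
    HUMAN = "human"
    AI = "ai"


class Intent(Enum):
    INTENTIONAL = "intentional"
    UNINTENTIONAL = "unintentional"


class Timing(Enum):
    PRE_DEPLOYMENT = "pre-deployment"
    POST_DEPLOYMENT = "post-deployment"


@dataclass(frozen=True)
class RiskEntry:
    """One hypothetical checklist row: a risk tagged on both dimensions."""
    description: str
    entity: Entity    # who or what causes the risk
    intent: Intent    # whether the harm is intended
    timing: Timing    # before or after deployment
    domain: str       # e.g. "discrimination", "privacy", "misinformation"


def checklist(entries, *, domain=None, timing=None):
    """Return entries matching the optional domain and/or timing filters."""
    return [
        e for e in entries
        if (domain is None or e.domain == domain)
        and (timing is None or e.timing == timing)
    ]


# Made-up sample entries for illustration only (not repository data).
SAMPLE = [
    RiskEntry("Model output reinforces hiring bias", Entity.AI,
              Intent.UNINTENTIONAL, Timing.POST_DEPLOYMENT, "discrimination"),
    RiskEntry("Training data contains personal records", Entity.HUMAN,
              Intent.UNINTENTIONAL, Timing.PRE_DEPLOYMENT, "privacy"),
    RiskEntry("Generated text used to spread false claims", Entity.HUMAN,
              Intent.INTENTIONAL, Timing.POST_DEPLOYMENT, "misinformation"),
]

if __name__ == "__main__":
    # List only the risks that arise after deployment, grouped by domain label.
    for e in checklist(SAMPLE, timing=Timing.POST_DEPLOYMENT):
        print(f"[{e.domain}] {e.description}")
```

Filtering on both dimensions at once, for example post-deployment privacy risks, is the kind of tailoring the classification is meant to support; a real deployment would load entries from the repository itself rather than hard-coding them.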

Beyond risk assessment, the ethical deployment of AI is another critical concern for businesses. Deloitte’s recent survey of 100 C-level executives sheds light on how companies approach AI ethics. Ensuring transparency in data usage emerged as a top priority, cited by 59% of respondents. The survey also found that larger organizations, those with annual revenues of $1 billion or more, were more proactive in integrating ethical frameworks and governance structures to foster technological innovation.

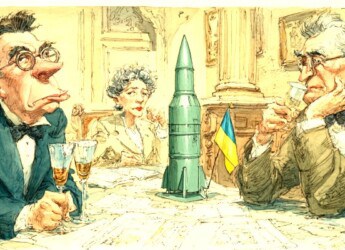

Unethical uses of AI, such as reinforcing biases or generating misinformation, pose substantial risks. The White House Office of Science and Technology Policy has addressed these issues by issuing a Blueprint for an AI Bill of Rights, aimed at preventing discrimination and ensuring responsible AI usage. Separately, under an October 2023 executive order, U.S. companies deploying AI for high-risk tasks must report to the Department of Commerce starting in January 2024. Beena Ammanath, executive director of the Global Deloitte AI Institute, highlights the dual nature of AI, stating, “For any organization adopting AI, the technology presents both the potential for positive results and the risk of unintended outcomes.”

The CSA’s report “Using Artificial Intelligence (AI) for Offensive Security” examines AI’s potential in cybersecurity. Lead author Adam Lundqvist explains how AI, particularly large language models (LLMs), enhances offensive security by automating tasks such as reconnaissance, vulnerability analysis, and reporting. Despite these advances, Lundqvist cautions that AI is “not a silver bullet” and must be integrated thoughtfully, balancing automation with human oversight.

According to Kirti Chopra, a lead author of the CSA report, organizations should adopt robust governance, risk, and compliance (GRC) frameworks to ensure safe and ethical AI integration. Chopra stresses that human oversight is necessary to validate AI outputs, because the technology is only as effective as its training data and algorithms.

As businesses continue to explore AI’s capabilities, the development of comprehensive resources and ethical guidelines remains paramount. By leveraging tools like the AI Risk Repository and adhering to ethical standards, organizations can harness AI’s potential while mitigating its risks, ensuring a secure and innovative future.

News Sources

- MIT releases comprehensive database of AI risks

- CSA addresses using AI for offensive security in new report

- Businesses Seek to Balance AI Innovation and Ethics, According to Deloitte

- AI’s Transformative Role in Offensive Security and DevOps: A Strategic Shift in Cyber Defense

Assisted by GAI and LLM Technologies

Additional Reading

- eDiscovery Review in Transition: Manual Review, TAR, and the Role of AI

- The Workstream of eDiscovery: Considering Processes and Tasks

Source: ComplexDiscovery OÜ