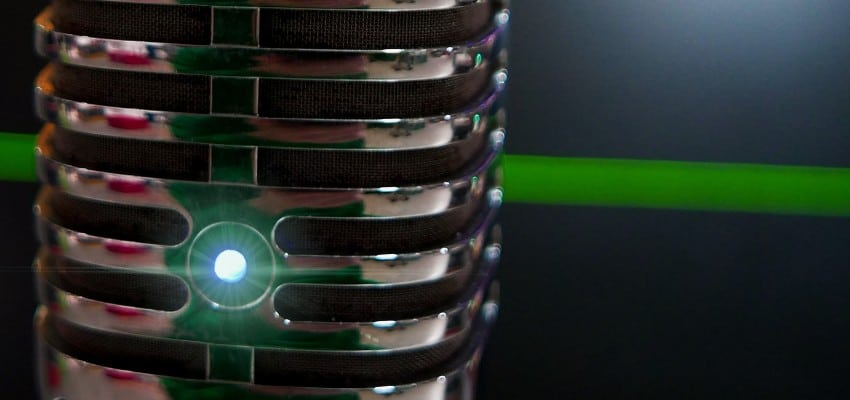

Content Assessment: Can You Hear Me? Analyzing the Privacy of Mute Buttons in Video Conferencing Apps

Information - 91%

Insight - 90%

Relevance - 87%

Objectivity - 93%

Authority - 92%

91%

Excellent

A short percentage-based assessment of the qualitative benefit of the recently published research report that provides a privacy analysis of the mute buttons in video conferencing applications.

Editor’s Note: From time to time, ComplexDiscovery highlights publicly available or privately purchasable announcements, content updates, and research from cyber, data, and legal discovery providers, research organizations, and ComplexDiscovery community members. While ComplexDiscovery regularly highlights this information, it does not assume any responsibility for content assertions.

To submit recommendations for consideration and inclusion in ComplexDiscovery’s cyber, data, and legal discovery-centric service, product, or research announcements, contact us today.

Background Note: Shared for the non-commercial educational benefit of cybersecurity, information governance, and eDiscovery professionals, this recently published research report investigates the privacy issues associated with the mute button in video conferencing applications, focusing on whether a mismatch exists between the user’s perception of the mute button and its actual behavior.

Research Report*

Are You Really Muted? A Privacy Analysis of Mute Buttons in Video Conferencing Apps

Yucheng Yang, Jack West†, George K. Thiruvathukal, Neil Klingensmith, and Kassem Fawaz

Abstract

Video conferencing apps (VCAs) have converted previously private spaces (bedrooms, living rooms, and kitchens) into semi-public extensions of the office. For the most part, users have accepted these apps in their personal space without much thought about the permission models that govern the use of their private data during meetings. While access to a device’s video camera is carefully controlled, little has been done to ensure the same level of privacy for accessing the microphone. In this work, we ask the question: what happens to the microphone data when a user clicks the mute button in a VCA? We first conduct a user study to analyze users’ understanding of the permission model of the mute button. Then, using runtime binary analysis tools, we trace raw audio flow in many popular VCAs as it traverses the app from the audio driver to the network. We find fragmented policies for dealing with microphone data among VCAs: some continuously monitor the microphone input during mute, and others do so periodically. One app transmits statistics of the audio to its telemetry servers while the app is muted. Using network traffic that we intercept en route to the telemetry server, we implement a proof-of-concept background activity classifier and demonstrate the feasibility of inferring the ongoing background activity during a meeting, such as cooking, cleaning, or typing. We achieved 81.9% macro accuracy on identifying six common background activities using intercepted outgoing telemetry packets when a user is muted.
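Illustrative Note: For readers unfamiliar with the "macro accuracy" figure cited in the abstract, the sketch below shows one common way such a metric is computed. It assumes macro accuracy means the unweighted mean of per-class accuracy (per-class recall), which may differ from the authors' exact definition. The function name `macro_accuracy`, the three activity labels used, and all predictions are hypothetical and are not data from the report.

```python
from collections import defaultdict


def macro_accuracy(y_true, y_pred):
    """Return (macro accuracy, per-class accuracy).

    Assumption: macro accuracy is the unweighted mean of each class's
    accuracy, so rare classes count as much as common ones.
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for truth, pred in zip(y_true, y_pred):
        total[truth] += 1
        if truth == pred:
            correct[truth] += 1
    per_class = {label: correct[label] / total[label] for label in total}
    return sum(per_class.values()) / len(per_class), per_class


# Hypothetical ground truth and classifier output (not from the report).
y_true = ["cooking"] * 4 + ["cleaning"] * 2 + ["typing"] * 4
y_pred = [
    "cooking", "cooking", "cooking", "typing",   # 3 of 4 cooking correct
    "cleaning", "cooking",                       # 1 of 2 cleaning correct
    "typing", "typing", "typing", "typing",      # 4 of 4 typing correct
]

score, per_class = macro_accuracy(y_true, y_pred)
plain = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

print(f"macro accuracy: {score:.3f}")   # macro accuracy: 0.750
print(f"plain accuracy: {plain:.3f}")   # plain accuracy: 0.800
```

In this toy example the plain accuracy (0.800) is higher than the macro accuracy (0.750) because the weakest class, cleaning, has the fewest samples. Averaging per class keeps a poorly recognized activity from being hidden by more frequent ones.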

Extract – Video Conferencing Apps (VCA) Privacy Policy (Section 6.3)

Few participants in our user study were aware of the data collection or sharing policies of popular VCAs. Around 70% of our participants believe that the mute button blocks the transmission of microphone data or disables the microphone altogether. VCA service providers should provide detailed definitions of data collection scenarios rather than generic statements about how they collect data about their users. All VCAs actively query the microphone when the user is muted, and they might have legitimate purposes. For example, Zoom alerts the user when they try to speak with their microphone muted. The privacy policies of these services need to be explicit about microphone access, which is not currently the case.

Read the original research report.

*Shared with permission (CC BY-NC-ND-2.0)

Additional Reading

- An eDiscovery Market Size Mashup: 2021-2026 Worldwide Software and Services Overview

- Defining Cyber Discovery? A Definition and Framework

Source: ComplexDiscovery